We can only see a short distance ahead, but we can see plenty there that needs to be done. - Alan Turing

This article was written in the early days. For the latest best practices (BP) of AI, go and see BP of VibeCoding.

Unleash the power of AI

OpenAI GPTs are powerful, but unleashing that power in our daily dev work takes some insight. As a frontend (full-stack) developer, I have summarized some best practices, tips, and patterns for AI-driven frontend development, based on my daily work and research.

I hope sharing this experience helps! And here we go ~ 🚀

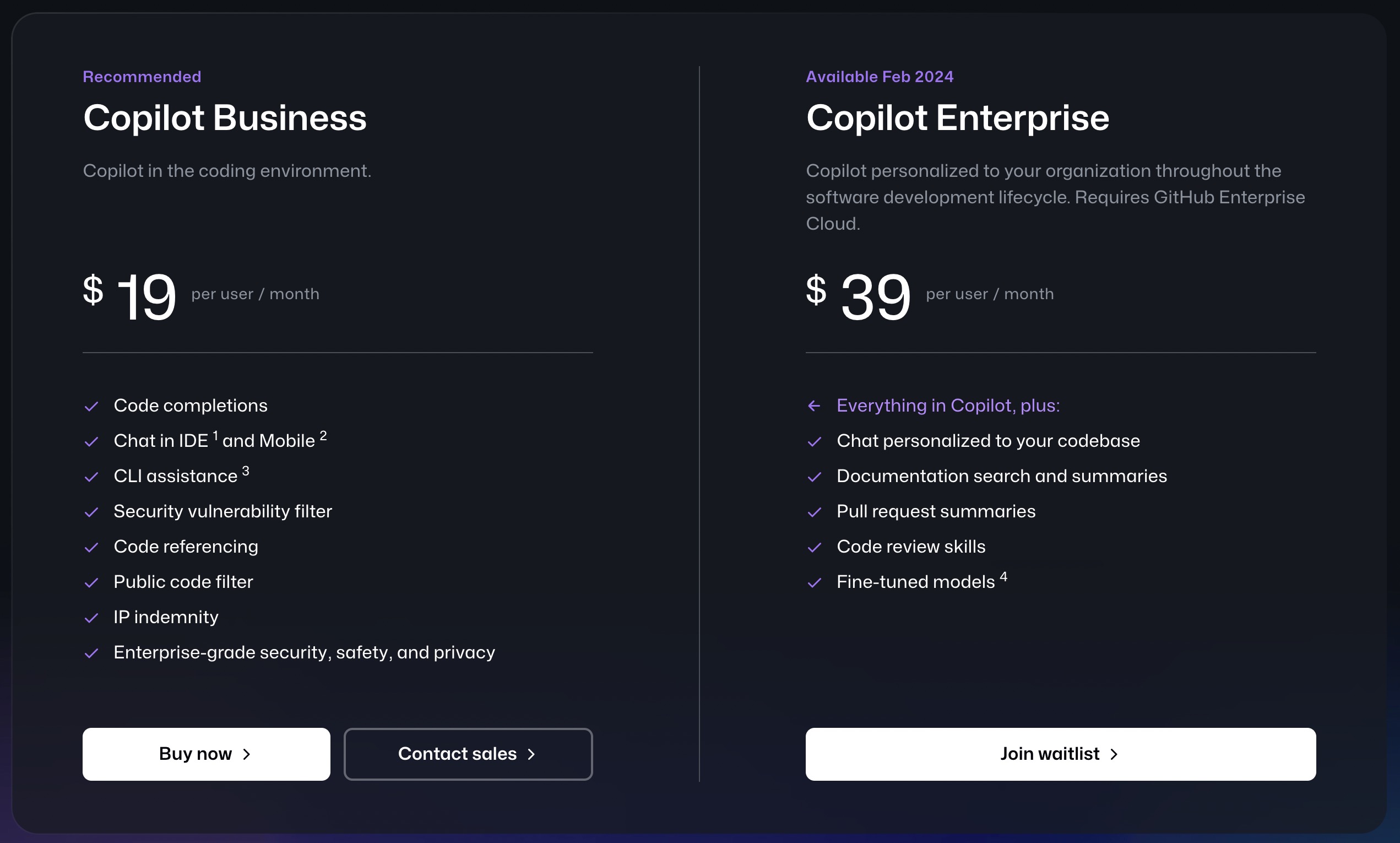

GitHub Copilot

Use it without any hesitation. 😎

🤖 GitHub Copilot has already shown us the power of AI in our daily work. It is great to work with as a pair programmer in the following ways, using its paid features:

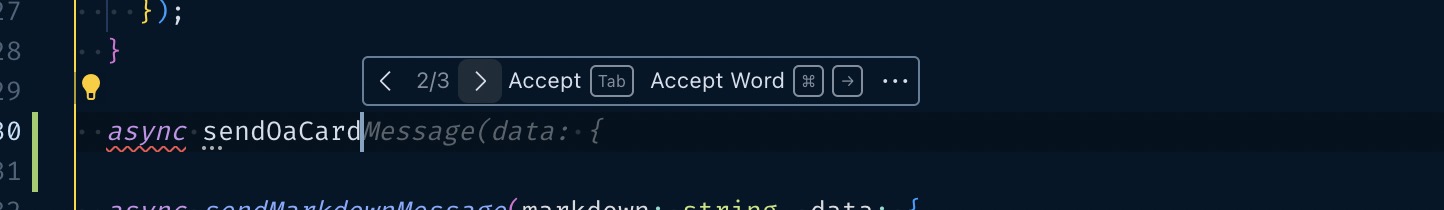

- Direct code completion: as you type, it suggests completions based on your context. This is the most useful feature for me: it works so naturally that I can focus on the code logic instead of the code syntax.

:::

Direct code completion offers multiple suggestions to choose from, but even the one you pick is not always the best, so you need to review it carefully.

:::

- Write tests with Github Copilot Chat feature, you can use

/testcommand to ask Copilot to write tests for you, it's a great way to write tests, but you need to be careful with it, because it's not always the best test cases, you need to review it and modify it to make it better. - Explain code if you have doubts about the code, you can use

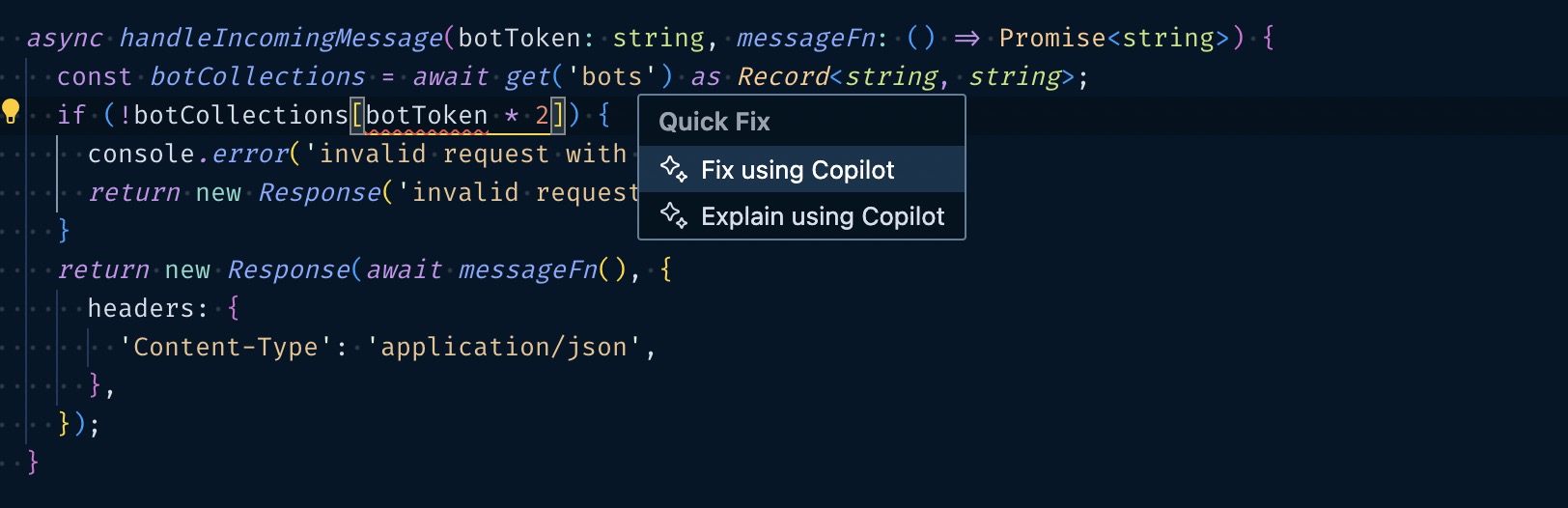

/explaincommand to ask Copilot to explain the code for you, feel natural and useful. - Fix bugs and errors you can fix bug and error especially about typescript errors with single hover on the error and click the

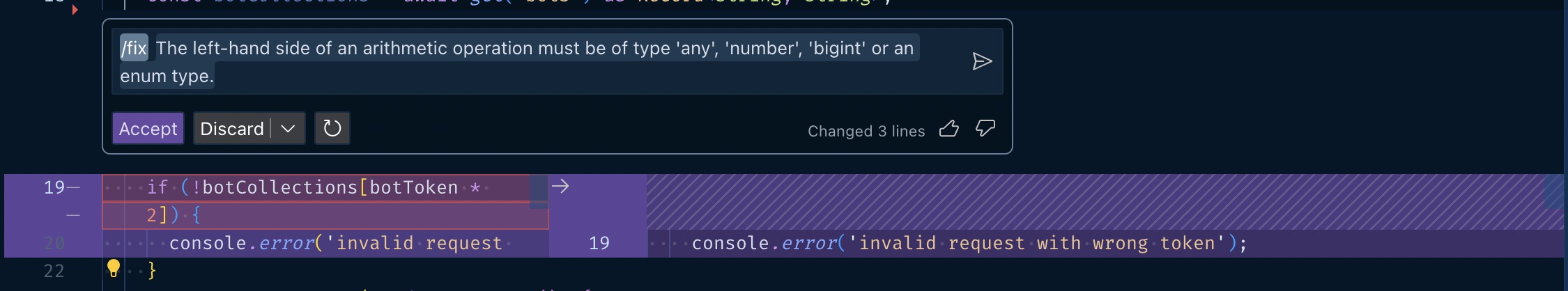

Fixbutton.

:::

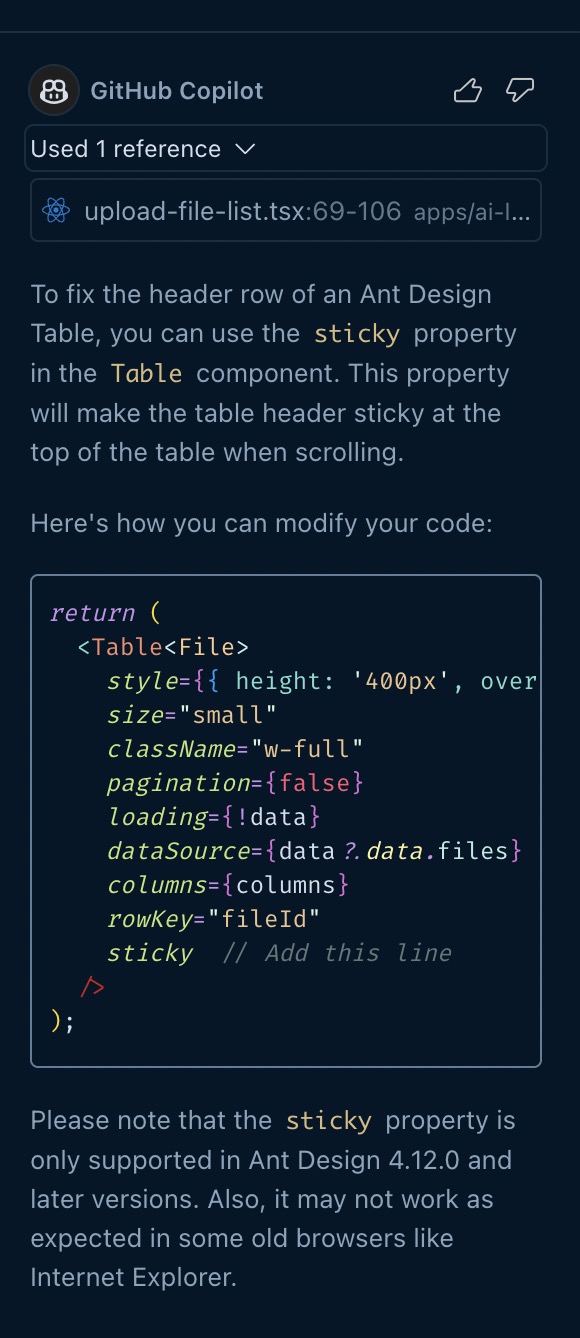

Show the error hint and fix with Copilot:

Fix the error with Copilot:

This works out so well for me, especially for TypeScript errors. AI can find its way ~

:::

- Chat with your code context: in your editor, you can chat with the code you selected as context, or with your current file, and even with code language server APIs. These may be picked up by Copilot and used as context to generate code for you.

- Enterprise-level features: these may include more features to integrate with:

  - CLI enhancement: for code commits and command suggestions, like `Wrap` did.

  - Code and information security: for the code security concerns of businesses.

  - Support for embedding and using your own code and documents as context to perform the tasks above, so you can chat with your documentation and your code-base. That's quite useful when you want to gain more insights from your code-base and documentation.

  - Fine-tuning the AI model to fit your business needs.

Without the enterprise-level features, we can implement something similar with a VS Code extension that reads the related code context and hands it to OpenAI or another API service vendor to generate the content we need in a cheaper way.
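As a rough sketch of that idea, the snippet below assembles a chat-style request body from code context that an extension has already collected. The `EditorContext` shape, the function name, and the model string are illustrative assumptions, not part of any real extension API; the actual editor integration and the HTTP call are left out.

```typescript
// Hypothetical shape of the context an editor extension could collect.
interface EditorContext {
  filePath: string;
  languageId: string;
  selectedText: string;
}

interface ChatMessage {
  role: "system" | "user";
  content: string;
}

interface ChatRequest {
  model: string;
  messages: ChatMessage[];
}

// Three backticks, built at runtime so this example can sit inside a fenced block.
const FENCE = ["`", "`", "`"].join("");

// Turns the selected code plus an instruction into a chat-style request body.
function buildContextRequest(
  ctx: EditorContext,
  instruction: string,
  model: string
): ChatRequest {
  const codeBlock = [FENCE + ctx.languageId, ctx.selectedText, FENCE].join("\n");
  return {
    model,
    messages: [
      { role: "system", content: "You are a coding assistant. Answer based on the given code only." },
      { role: "user", content: `File: ${ctx.filePath}\n\n${codeBlock}\n\n${instruction}` },
    ],
  };
}

// Example usage with made-up values.
const explainRequest = buildContextRequest(
  { filePath: "src/a.ts", languageId: "typescript", selectedText: "const x = 1;" },
  "Explain this code.",
  "gpt-4" // assumption: any chat model name your vendor accepts
);
console.log(JSON.stringify(explainRequest, null, 2));
```

The resulting object can then be sent as the JSON body to whichever chat completion endpoint your vendor provides.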

Code Generation Patterns

Before we start, I have to say: do use the latest and strongest model, such as the GPT-4 series, to solve your dev problems and to apply the code-generation patterns below. Compared with GPT-3.5, it is more reliable and more powerful!

Sometimes, when the code context becomes more complex, we need to describe the requirements in a more structured way to generate code. Here are some patterns:

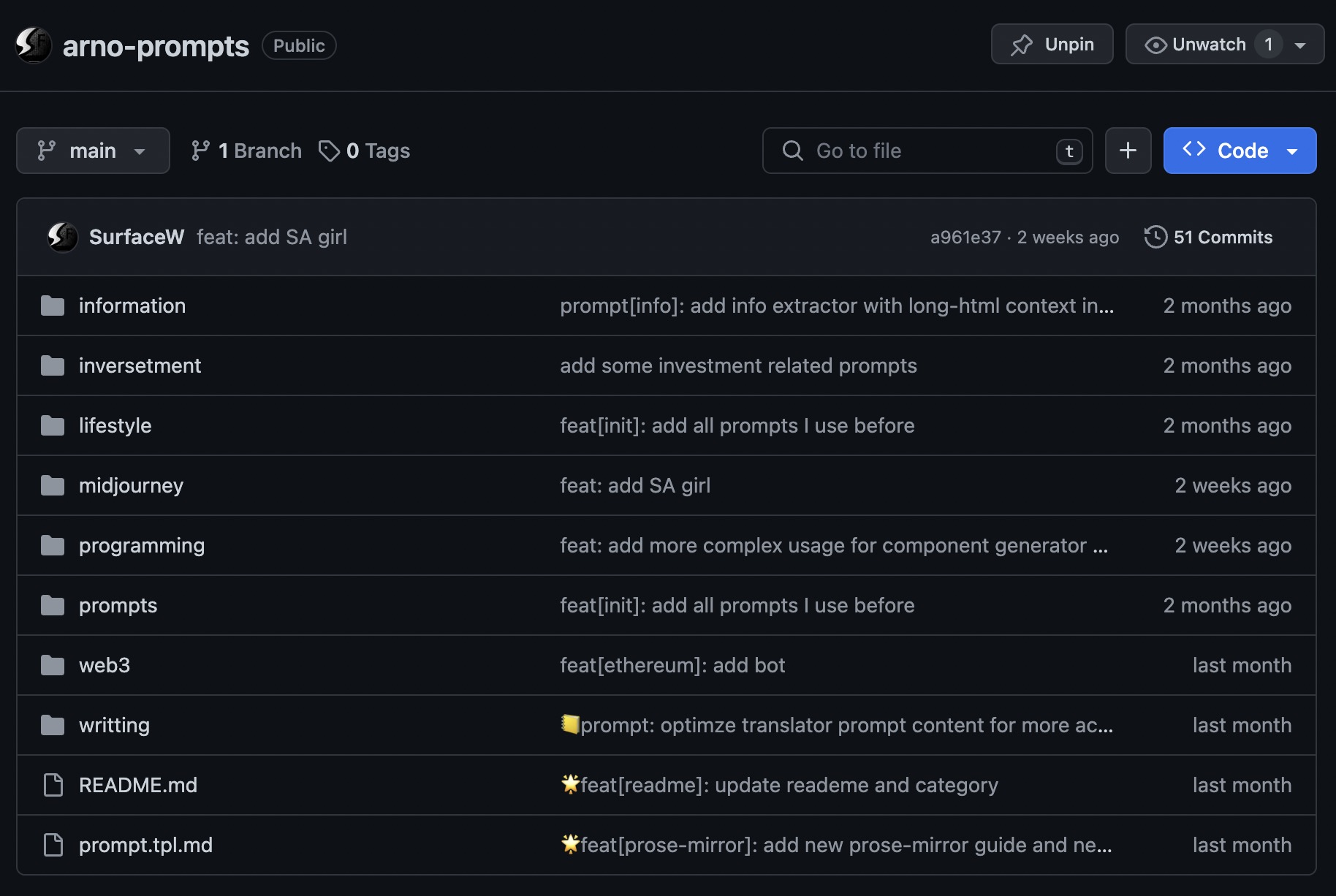

- Maintain your own prompt garden in Github: you can edit your prompt with

giton Github.- my prompt garden is here: 🌟 Arno's Prompts, maybe, you can get inspired from it.

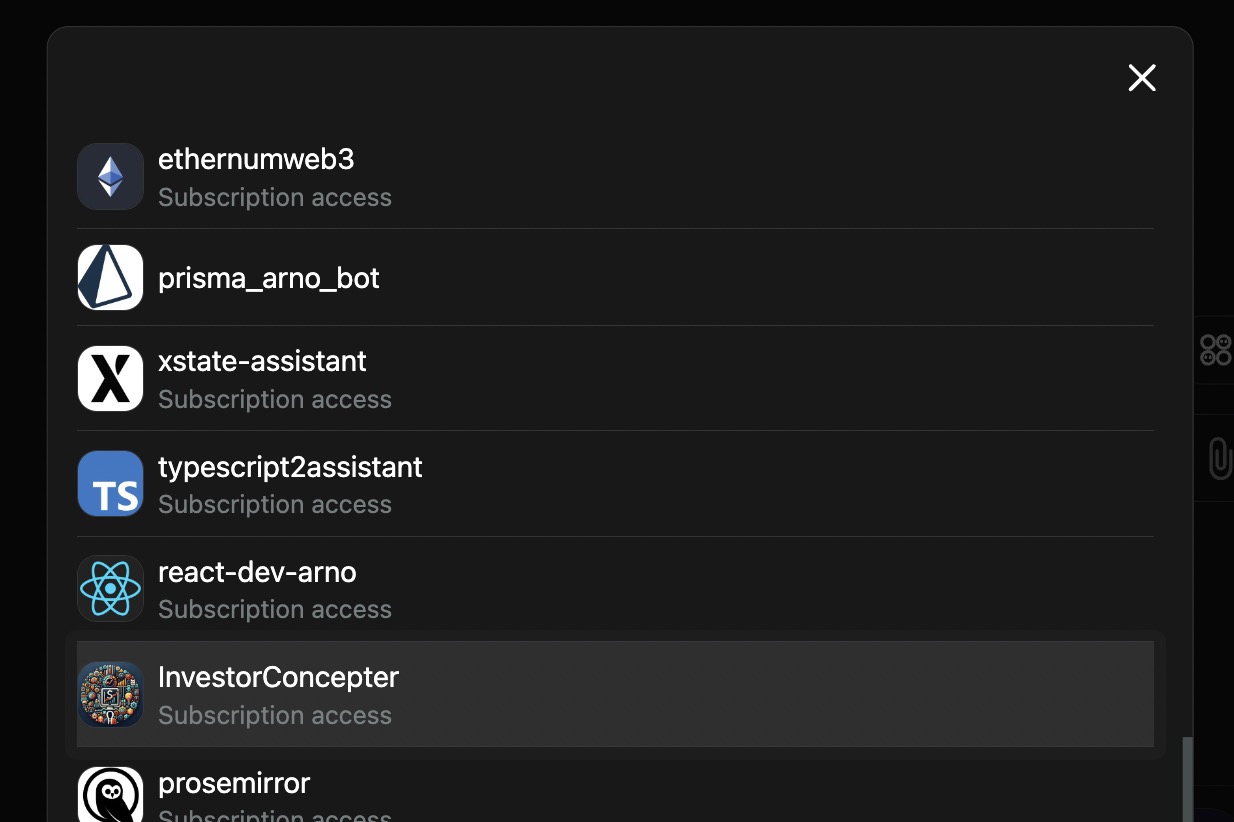

- use POE liked, Similar bots/ agents Creator to solve your repeated or domain-context related problems. I used to create lots of dev related bots to help me solve my dev problems:

- with simple

system prompt, we can create bot like simple-agent to work on specific domain tasks. - with some extra files as

Knowledgeto be retrieved as prompt context. - place your prompt inside your project source code such as

*.prompt.mdfiles, to give the context of code generation in convenience of future modifications and re-usages,

- with simple
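To make the `*.prompt.md` idea more concrete, here is a minimal sketch of filling such a prompt file with context before sending it as a system prompt. The `{{name}}` placeholder syntax, the `fillPrompt` helper, and the file content are assumptions made for illustration; the file is inlined as a string so the example runs without reading from disk.

```typescript
// Hypothetical content of a `component.prompt.md` file kept next to the source code.
const promptFile = [
  "1. use TypeScript",
  "2. do output code without explanation",
  "",
  "Based on the context given below work:",
  "{{context}}",
].join("\n");

// Replaces every {{name}} placeholder with its value; unknown placeholders stay untouched.
function fillPrompt(template: string, values: Record<string, string>): string {
  return template.replace(/\{\{(\w+)\}\}/g, (match: string, key: string) => values[key] ?? match);
}

// Example usage: the filled text becomes the system prompt of the bot.
const systemPrompt = fillPrompt(promptFile, {
  context: "interface User { id: number; name: string }",
});
console.log(systemPrompt);
```

Keeping the template in the repository means prompt changes are reviewed and versioned together with the code they generate.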

Let's take my next.js + antd component generator bot as an example. Its system prompt is quite simple:

1. use AntDesign Component Library to create a component

2. use TypeScript version 5

3. use React Hook Style

4. generate the text start with ```tsx and end with ```

5. do not use `export default` syntax, use `export const` instead

6. add `'use client';` on the first line of the code if user use next.js project

7. use `Typography` for basic text display

8. use `tailwindcss` as class utility for custom style if necessary(better without it unless you need to implement style yourself)

9. try to use `<Skeleton />` for loading state in the component render

10. **do output code without explanation**

Here are optional rules:

* if you are generating a table component, you should consider table column width, ellipsis text, and add some formatters if necessary

* if column width is not specified, you should use `auto` width and consider use scroll feature of table if table is too wide

* use the standard `useSwr` or `useMutation` from swr lib or `@tanstack/react-query` API if user required data fetching

Based on the context given below work:

<TSXCode>

But it works perfectly to generate my project code like:

'use strict';

import React from 'react';

import { Descriptions, Skeleton } from 'antd';

import useSWR from 'swr';

import { MaasCodeBenchmarkReport } from './your-api-definition-file';

interface PressureTestReportProps {

benchmarkId: number;

}

interface BenchmarkMetrics {

avgGpuUsage: string;

avgGpuMemUsage: string;

avgCpuUsage: string;

avgMemUsage: string;

avgQPS: string;

avgErr: string;

avgRT: string;

}

interface PressureTestReportData {

benchmarkMetrics: BenchmarkMetrics;

benchmarkLog: string;

isGPUError: string;

minConsumption: string;

}

export const PressureTestReport: React.FC<PressureTestReportProps> = ({ benchmarkId }) => {

const { data, error } = useSWR(`/admin/maas/benchmark/report/${benchmarkId}`, MaasCodeBenchmarkReport);

if (error) return <Typography.Text type="danger">Failed to load data!</Typography.Text>;

if (!data) return <Skeleton active />;

const reportData: PressureTestReportData = data.data;

return (

<Descriptions layout="vertical" bordered>

<Descriptions.Item label="Average GPU Usage">{reportData.benchmarkMetrics.avgGpuUsage}</Descriptions.Item>

<Descriptions.Item label="Average GPU Memory Usage">{reportData.benchmarkMetrics.avgGpuMemUsage}</Descriptions.Item>

<Descriptions.Item label="Average CPU Usage">{reportData.benchmarkMetrics.avgCpuUsage}</Descriptions.Item>

<Descriptions.Item label="Average Memory Usage">{reportData.benchmarkMetrics.avgMemUsage}</Descriptions.Item>

<Descriptions.Item label="Average QPS">{reportData.benchmarkMetrics.avgQPS}</Descriptions.Item>

<Descriptions.Item label="Average Error Rate">{reportData.benchmarkMetrics.avgErr}</Descriptions.Item>

<Descriptions.Item label="Average Response Time">{reportData.benchmarkMetrics.avgRT}</Descriptions.Item>

<Descriptions.Item label="Benchmark Log">{reportData.benchmarkLog}</Descriptions.Item>

<Descriptions.Item label="Is GPU Error">{reportData.isGPUError}</Descriptions.Item>

<Descriptions.Item label="Minimum Consumption">{reportData.minConsumption}</Descriptions.Item>

</Descriptions>

);

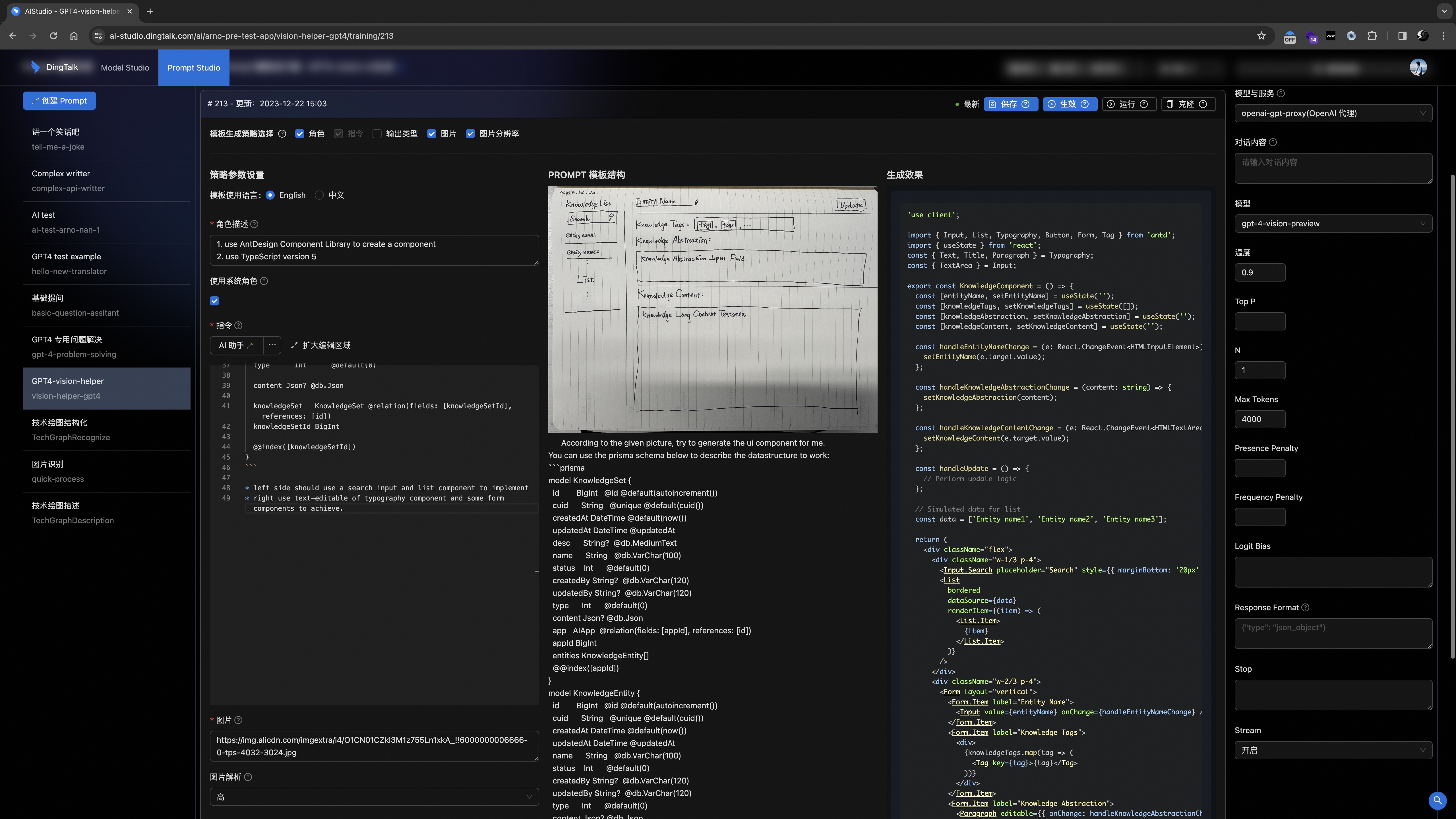

};- OpenAI's multi-modal AI APIs or similar API like Google's Gemini Vision to generate code with image in a simple way:

Take a hand-drawn UI image as an example: we can use multi-modal AI to generate code for us with the GPT-4-Vision API.
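A minimal sketch of such an image-to-code request is shown below. It only builds the request body, using the chat message format in which a user message carries both a text part and an `image_url` part holding a base64 data URL. The function name, the user instruction text, the `max_tokens` value, and the model string are assumptions; check your vendor's documentation for the model names currently available.

```typescript
// One user message can mix text parts and image parts.
type ContentPart =
  | { type: "text"; text: string }
  | { type: "image_url"; image_url: { url: string } };

interface VisionMessage {
  role: "system" | "user";
  content: string | ContentPart[];
}

interface VisionRequest {
  model: string;
  max_tokens: number;
  messages: VisionMessage[];
}

// Builds a request that asks the model to turn a UI sketch into component code.
function buildImageToCodeRequest(
  imageBase64: string,
  mimeType: string,
  systemPrompt: string,
  model: string
): VisionRequest {
  return {
    model,
    max_tokens: 2048, // assumption: enough room for one component
    messages: [
      { role: "system", content: systemPrompt },
      {
        role: "user",
        content: [
          { type: "text", text: "Generate a component for the UI drawn in this image." },
          // The image travels inline as a data URL, so no file hosting is needed.
          { type: "image_url", image_url: { url: `data:${mimeType};base64,${imageBase64}` } },
        ],
      },
    ],
  };
}

// Example usage with a tiny fake payload ("QUJD" is base64 for "ABC").
const visionRequest = buildImageToCodeRequest(
  "QUJD",
  "image/png",
  "use AntDesign Component Library to create a component",
  "gpt-4-vision-preview" // assumption: replace with a vision-capable model you have access to
);
console.log(JSON.stringify(visionRequest, null, 2));
```

Reusing the same system prompt as the text-only bot keeps the generated component consistent with the rest of the project.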

Tools and Platforms

- Component-based code generation platforms like `v0.dev` by Vercel, which are based on visual pattern components.

  - These components are suitable for RSC (React Server Component) scenarios, and it works perfectly under some simple visual design conditions.

- OpenAI GPTs with more complex agent features, like tools / function calls / file-context embedding / ..., to make the dev experience even better in some specific complex tasks.

- ByteDance's Coze is another nice choice to replace OpenAI's GPTs.
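To illustrate the tools / function calls part, here is a small sketch of a tool described with a JSON-schema style definition, plus a local dispatcher that runs the call the model asks for. The tool `list_components`, the in-memory `catalog`, and the `dispatchToolCall` helper are made up for this example; only the general shape (a named function with JSON-schema parameters, and arguments arriving as a JSON string) follows the common function-calling convention.

```typescript
// Shape of a function-calling tool definition sent along with the chat request.
interface ToolDefinition {
  type: "function";
  function: {
    name: string;
    description: string;
    parameters: {
      type: "object";
      properties: Record<string, { type: string; description: string }>;
      required: string[];
    };
  };
}

// Hypothetical tool: lets the model look up which components exist in a category.
const listComponentsTool: ToolDefinition = {
  type: "function",
  function: {
    name: "list_components",
    description: "List the UI components available in a category",
    parameters: {
      type: "object",
      properties: { category: { type: "string", description: "e.g. form or display" } },
      required: ["category"],
    },
  },
};

// Made-up in-memory data standing in for a real component registry.
const catalog: Record<string, string[]> = {
  form: ["Input", "Select"],
  display: ["Descriptions", "Skeleton"],
};

type ToolHandler = (args: Record<string, unknown>) => string;

const handlers: Record<string, ToolHandler> = {
  list_components: (args) => JSON.stringify(catalog[String(args["category"])] ?? []),
};

// Runs the tool the model requested; the arguments arrive as a JSON string.
function dispatchToolCall(name: string, rawArguments: string): string {
  const handler = handlers[name];
  if (!handler) return JSON.stringify({ error: `unknown tool: ${name}` });
  return handler(JSON.parse(rawArguments) as Record<string, unknown>);
}

console.log(listComponentsTool.function.name, dispatchToolCall("list_components", '{"category":"form"}'));
```

The string returned by the dispatcher is what gets sent back to the model as the tool result in the next turn.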

Future Patterns

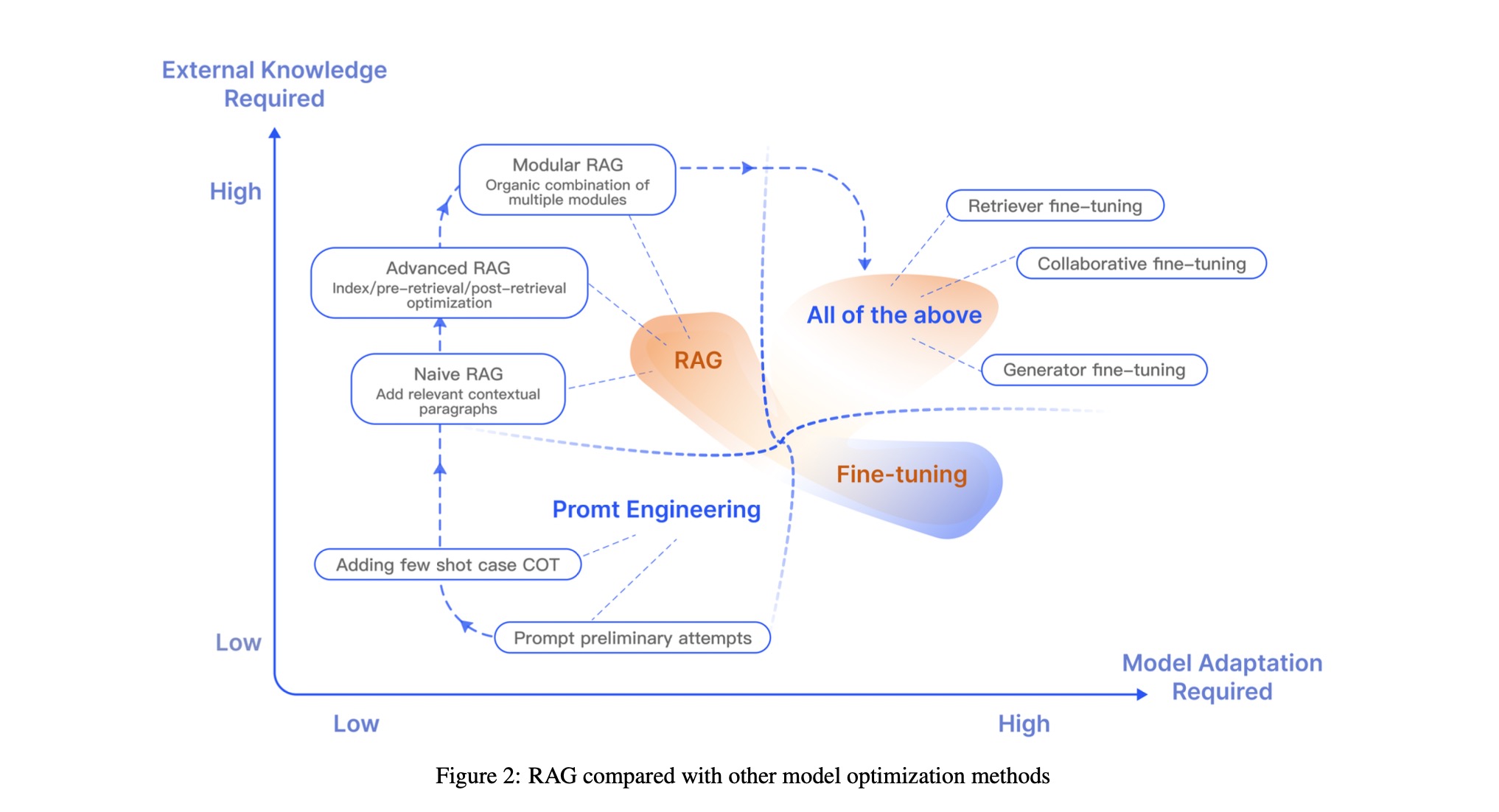

- Full RAG and fine-tuning pattern, as an enhancement for complex tasks. Use RAG patterns to bring in external knowledge, such as code snippets, PRDs, images, and more structured data, as more accurate context to feed the LLM for more accurate output.

Image from the paper Retrieval-Augmented Generation for Large Language Models: A Survey

- Semi-auto-meta pattern: a human organizes the key steps of multiple agents to complete a complex task with structured complex context (very matured AI pattern in the future), working inside one workspace (like Notion) to structure the context and provide it to AI agents, so that complex operations stay in one place. -> idea from elaboration studio of Arno.

- Full-auto-meta pattern: multiple agents working together to complete a complex task with structured complex context (very matured AI pattern in the future).
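The RAG pattern from the first item above can be sketched in a few lines. This is a toy version under stated assumptions: plain word overlap stands in for the embedding similarity a real setup would use, the knowledge chunks are made up, and all names (`KnowledgeChunk`, `retrieve`, `buildAugmentedPrompt`) are illustrative.

```typescript
interface KnowledgeChunk {
  id: string;
  text: string;
}

// Lowercases, splits on non-alphanumerics, and removes duplicate words.
function tokenize(text: string): string[] {
  const tokens = text.toLowerCase().split(/[^a-z0-9]+/).filter((t) => t.length > 0);
  return tokens.filter((t, i) => tokens.indexOf(t) === i);
}

// Toy relevance score: how many distinct query words appear in the chunk.
function overlapScore(query: string, chunk: KnowledgeChunk): number {
  const chunkTokens = tokenize(chunk.text);
  return tokenize(query).filter((t) => chunkTokens.indexOf(t) >= 0).length;
}

// Retrieval step: keep the topK chunks that share at least one word with the query.
function retrieve(query: string, chunks: KnowledgeChunk[], topK: number): KnowledgeChunk[] {
  return chunks
    .map((chunk) => ({ chunk, score: overlapScore(query, chunk) }))
    .filter((entry) => entry.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, topK)
    .map((entry) => entry.chunk);
}

// Augmentation step: put the retrieved chunks in front of the question.
function buildAugmentedPrompt(question: string, chunks: KnowledgeChunk[], topK: number): string {
  const context = retrieve(question, chunks, topK)
    .map((c) => `[${c.id}] ${c.text}`)
    .join("\n");
  return `Answer using only the context below.\n\nContext:\n${context}\n\nQuestion: ${question}`;
}

// Made-up knowledge base for the example.
const knowledge: KnowledgeChunk[] = [
  { id: "a", text: "Descriptions component shows read-only key value pairs" },
  { id: "b", text: "Table column width and ellipsis settings" },
  { id: "c", text: "Skeleton renders a loading placeholder" },
];

console.log(buildAugmentedPrompt("How do I set table column width?", knowledge, 2));
```

The generation step is then an ordinary LLM call with the augmented prompt; swapping the overlap score for vector similarity changes only `overlapScore`, not the overall flow.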

References

- 🤖 GitHub Copilot: GitHub-powered AI pair programming

- 🦄 POE: Chat bots for general usages of LLM

- ⚒️ v0.dev by Vercel: Low code integration with API invocation platforms which produce components based on pattern

- 💐 Arno's Prompts: Author's prompt garden, have fun with it 😎 ~

- WIP: ref to multiple modal AI

- WIP: ref to multi-agent AI